|

Hi Beautiful People. Today I'm going to talk about a technique called Stable Diffusion, which seems to crop up time and time again in the field of Generative AI. Firstly, Generative AI stands for Generative Artificial Intelligence and is a collection of AI techniques that allow you to produce new things. This could be works of art, real-life pictures or new types of music. In particular, the latest tools doing the rounds are OpenAI's DALL-E, which uses a diffusion technique, and ChatGPT, which uses a transformer technique. If you haven't heard of either of those, you must have been living under a rock, so feel free to learn more by experimentation in my previous blog post:

www.digitalcolmer.com/blog/-how-useful-is-generative-artificial-intelligence

What is Stable Diffusion?

Stable diffusion is a mathematical process that describes how something spreads out over time in a stable manner. It is a type of diffusion process in which the distribution of the quantity remains unchanged over time. In other words, the spread of the quantity is consistent and does not vary significantly over time. Stable diffusion processes are often used to model physical phenomena, such as the movement of particles in a fluid or the spread of a chemical through a substance. They can also be used to model the spread of information or ideas within a population.

In Generative AI, Stable Diffusion is a deep learning, text-to-image model. Deep learning is a technique that helps computers learn in a similar manner to the brain. It does this using a neural network that learns over time, much as a human learns by experimentation. Text-to-image models are used in AI to generate new images from a simple sentence, just like DALL-E above. Here is a great video from OpenAI that explains very simply how DALL-E works: www.youtube.com/watch?v=qTgPSKKjfVg&t=2s

How is it used in Generative AI?

Stable diffusion processes can be used in generative AI in a variety of ways. One example is a generative model that is trained to produce new, synthetic data that is similar to a training dataset. Generative models can be used to synthesize a wide variety of data, including images, text, and audio.

One way that stable diffusion is used in a generative model is to model the distribution of data in the training dataset. For example, if the training dataset consists of images of faces, a stable diffusion process could be used to model the distribution of features in the images, such as the shapes of the faces and the colors of the eyes.
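To make the "spreading out over time" idea a bit more concrete, here is a minimal Python sketch. It is a toy illustration only, not how DALL-E or any real image model is implemented: the function names `add_noise_step` and `diffuse`, the noise level `beta` and the two-cluster sample data are all assumptions made up for this example. Each step shrinks every value slightly and mixes in a little random noise, so the two tight clusters gradually blur into one bell-shaped cloud while the overall spread stays roughly the same.

```python
import math
import random
import statistics


def add_noise_step(values, beta, rng):
    """One diffusion step: shrink each value slightly and mix in Gaussian noise."""
    keep = math.sqrt(1.0 - beta)   # how much of the old value survives
    spread = math.sqrt(beta)       # how much fresh noise is mixed in
    return [keep * v + spread * rng.gauss(0.0, 1.0) for v in values]


def diffuse(values, steps, beta=0.05, seed=0):
    """Apply the noise step repeatedly and keep every intermediate snapshot."""
    rng = random.Random(seed)
    history = [list(values)]
    for _ in range(steps):
        history.append(add_noise_step(history[-1], beta, rng))
    return history


# Hypothetical "training data": two tight clusters standing in for two kinds of feature.
data = [-1.0] * 500 + [1.0] * 500
history = diffuse(data, steps=200)

# Print the step number, the average value and the spread at a few points in time.
for t in (0, 50, 200):
    snapshot = history[t]
    print(t, round(statistics.mean(snapshot), 2), round(statistics.pstdev(snapshot), 2))
```

Diffusion-based image generators are trained to run this kind of process in reverse: they start from pure noise and remove it step by step until something that resembles the training data appears.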
Modeling the distribution of features in the training data this way could help the generative model to generate new images of faces that are realistic and consistent with that data.

Another way that stable diffusion can be used in generative AI is to explore the space of possible data points that a model could generate. For example, a stable diffusion process could be used to generate a sequence of data points drawn from the distribution modeled by a generative model. This could be used to explore the range of outputs that the model is capable of producing, or to identify areas of the data space that are poorly represented in the training data. This could help generate music that is not random but ordered according to a harmonic major or minor system. This would produce music that is pleasing to the ear and could be used in film, TV or pop music.

Here is another great video that talks about Stable Diffusion. It starts off simple but then gets deeper and deeper. Simply click the stop button when you've had enough: www.youtube.com/watch?v=1CIpzeNxIhU

I hope you enjoyed this post. Stay safe, and I'll catch you all soon.

Paul Colmer #TheDigitalCoach


😍G'day Beautiful People. Thanks again for reading this blog post. Today I'm going to talk about and demonstrate the usefulness of some Generative AI tools. Many of you will know that I am a practicing musician. I have a music degree and I'm a fellow of the London College of Music. I spent 3-4 years of my career in film and TV music. So it's a real pleasure to start combining my tech knowledge with music by choosing to specialise in Generative AI. 😎

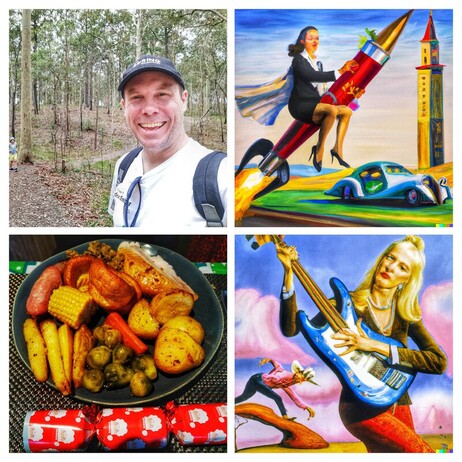

What is Generative AI?

Generative Artificial Intelligence refers to artificial intelligence (AI) systems that are able to generate new content, such as text, images, or music, based on a set of examples. The examples used to train the system are known as training data. These systems use machine learning and deep learning techniques to learn the patterns and characteristics of the content they are trained on, and then use this knowledge to generate new, original content that is similar to the training data.

Some examples of generative AI systems include text generators that can write news articles or social media posts, image generators that can create original images or photographs, and music generators that can compose new pieces of music. Perfect for speeding up the delivery of digital content and blog posts. 😂

But how good is Generative AI?

Well, most of the first paragraph above, and much of this blog post, has been generated using Generative AI. Feel free to compare what ChatGPT provided with how each section in this blog post is written. Clearly ChatGPT has a long way to go to make blog posts engaging and interesting, so anything remotely funny is probably my writing. 😎 I used a tool called ChatGPT from OpenAI. Check out the link here: chat.openai.com/chat

Who is OpenAI?

OpenAI is a research organization that focuses on the development of artificial intelligence (AI) technologies. It was founded in 2015 by a group of entrepreneurs and researchers, including Elon Musk, Sam Altman, Greg Brockman, and Ilya Sutskever. Everything that Elon Musk touches seems to turn to gold, and OpenAI is no exception. The goal of OpenAI is to advance the field of AI in a responsible and safe manner, and to ensure that the benefits of AI are widely and fairly shared. I think this is a very noble cause, and I believe that all data scientists share a responsibility for the ethical and equitable use of AI across the human race.
OpenAI conducts research in a variety of areas, including machine learning, robotics, economics, and computer science. It has developed a number of influential AI technologies, such as the GPT (Generative Pre-trained Transformer) language processing AI and the DALL-E image generation AI. OpenAI also hosts conferences and events, and provides educational resources and tools for researchers and developers interested in AI. I'll talk about DALL-E further down the post, but let's first talk about ChatGPT! 🚀

Tell me more about ChatGPT?

The GPT in ChatGPT is short for "Generative Pre-trained Transformer". It is a type of language processing AI developed by a company called OpenAI. It is a large, deep neural network that is trained to generate natural language text that is similar to human writing.

What is a Deep Neural Network?

A deep neural network is a type of artificial neural network that is composed of many layers of interconnected nodes, or "neurons." These networks are called "deep" because they have many layers, as opposed to shallow networks that have only a few layers. Deep neural networks are designed to recognize patterns and relationships in data, and can be used for a wide range of tasks, such as image and speech recognition, natural language processing, and machine translation. They are particularly well-suited for tasks that require the learning and recognition of complex patterns, as the multiple layers of neurons allow the network to learn and represent these patterns in a hierarchical manner.

Deep neural networks are trained using large datasets and algorithms that adjust the connections between neurons in order to minimize the error between the network's predictions and the true values in the training data. They are an important part of the field of deep learning, which has led to many significant advances in AI in recent years.

What is a Generative Pre-Trained Transformer?
Apart from being a mouthful, it is simply three things:

Generative means that it produces content, rather than trying to predict something, which is a common use case for machine learning and deep learning models.

Pre-Trained means the model has been fed lots of great data. Bad data will produce poor results, so the latest version of ChatGPT has used humans in the training process to enhance the content.

Transformer is a specific generative model that is used in natural language processing to help model and translate language, but is used here to help generate new content based on a simple question. It is also used in music to extend melodies. Great example here: aws.amazon.com/blogs/machine-learning/using-transformers-to-create-music-in-aws-deepcomposer-music-studio/

Show me some more Generative AI examples?

Check out some examples I have created using AWS DeepComposer here: soundcloud.com/morphosis

And check out my blog post that I published at Amazon Web Services here: aws.amazon.com/blogs/machine-learning/generate-a-jazz-rock-track-using-aws-deepcomposer-with-machine-learning/

Finally, 2 of the pictures at the top of this blog were created using another OpenAI product called DALL-E. Check it out here: openai.com/dall-e-2/

Have fun and try some experiments. Please feel free to share your creative projects with me on LinkedIn, Twitter and Instagram.

Paul Colmer #TheDigitalCoach |

Author: Paul Colmer is an AWS Senior Technical Trainer. Paul has an infectious passion for inspiring others to learn and for applying disruptive thinking in an engaging and positive way.

Archives

May 2023

|
