
23/12/2021

Tesla Model 3 Windscreen Wiper Fix

G'day beautiful people. It's almost Christmas. Sorry I haven't blogged for a while, but I've been super busy doing incredible work in the cloud with AWS. My Tesla Model 3 has inspired me to blog yet again, this time one day before Christmas Eve.

So... I have had a problem with my Tesla Model 3 windscreen wipers for about 3 weeks now. My partner was driving the car, and the wipers just went berserk. Simple fix... turn them off. Well... that worked for the last 3 weeks because I haven't been out in the rain. But today the heavens opened and I needed them. After switching to Auto, they furiously wiped back and forth, even though the rain was only spitting to start with.

I looked at quite a few forums and it is a common problem. There is a good thread on it here: teslamotorsclub.com/tmc/threads/wipers-dont-work-properly-in-auto.222547/

What is the fix? Make sure the centre front-facing windscreen camera is clean, and so too are your forward-facing side cameras. After cleaning mine... it worked a treat. Keep reading if you're interested to know why this fix most likely worked.

Being a curious person who wants to understand how the wipers work, I dug a little deeper. The automatic wipers use the camera system, together with a clever piece of technology called Artificial Intelligence (AI), to detect whether there is rain on the windscreen, and they also look for other visual weather cues.

From my research, my knowledge of the Tesla Model 3, and my knowledge of AI neural networks, I have deduced the following: the camera looking out through the windscreen, positioned between the driver and passenger on the front windscreen, is most likely detecting the rain, and the front-facing left and right cameras are looking for weather cues. The neural network that interprets what these 3 cameras see is trained in a lab somewhere at Tesla. The training data consists of real-world footage showing when it is raining. Lots of different types of data need to be fed into the neural network so that it can LEARN how to detect rain. In data science, this is known as TRAINING. A neural network is really a mathematical representation of an algorithm that can learn to do new things.
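If you're curious what TRAINING actually looks like, here's a tiny Python sketch of a single artificial neuron learning to tell "rain" from "dry". To be clear, this is not how Tesla does it: the two input features (`droplet_score` and `blur_score`) and the six labelled examples are made up for illustration, and a real vision system uses deep networks with millions of parameters. The idea is the same though: show the algorithm labelled examples, nudge its weights to reduce its mistakes, and what you're left with is the model.

```python
import math
import random

def sigmoid(z: float) -> float:
    """Squash any number into the range 0..1 so it can be read as a probability."""
    return 1.0 / (1.0 + math.exp(-z))

def predict(model, features) -> float:
    """Probability of rain according to a trained single-neuron model."""
    weights, bias = model
    return sigmoid(sum(w * x for w, x in zip(weights, features)) + bias)

def train_neuron(samples, labels, epochs=2000, learning_rate=0.5, seed=0):
    """TRAINING: repeatedly nudge the weights to reduce mistakes on labelled examples.

    This is logistic regression fitted with stochastic gradient descent,
    i.e. the simplest possible 'network' of one neuron.
    """
    rng = random.Random(seed)
    weights = [rng.uniform(-0.1, 0.1) for _ in samples[0]]
    bias = 0.0
    for _ in range(epochs):
        for features, label in zip(samples, labels):
            error = predict((weights, bias), features) - label  # how wrong we were
            weights = [w - learning_rate * error * x for w, x in zip(weights, features)]
            bias -= learning_rate * error
    return weights, bias  # the trained MODEL is just these learned numbers

# Hypothetical training data: [droplet_score, blur_score] per frame, each 0..1.
# Label 1 means a human marked the frame as "raining", 0 means "dry".
frames = [[0.9, 0.8], [0.8, 0.6], [0.7, 0.9], [0.1, 0.2], [0.2, 0.1], [0.0, 0.3]]
labels = [1, 1, 1, 0, 0, 0]

model = train_neuron(frames, labels)
print(predict(model, [0.85, 0.7]) > 0.5)   # True: looks like rain
print(predict(model, [0.15, 0.15]) > 0.5)  # False: looks dry
```

Testing the model on frames it hasn't seen before, like the last two lines do, is the "feed it more data to check it works" step described below.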
A neural network is a type of algorithm that mimics how the human brain works. The word neural refers to neurons, the nerve cells in the brain that help us all learn. By replicating a similar technique using mathematics, machines can be taught to learn from examples, much like humans do. If you remember the stories about AI beating Lee Sedol at the game of Go, that's the same technique.

Anyway... once the neural network is trained, the output is a model. The model is then tested by feeding it more live data, to ensure that it functions correctly. If it doesn't function correctly in all scenarios, the new learnings are fed back into the training process and new models can be TRAINED again to produce better results. This process usually takes weeks or months, depending on the complexity of the problem being solved.

If you'd like to learn more about neural networks, check out this awesome article: www.explainthatstuff.com/introduction-to-neural-networks.html

And if you'd like to have a play with neural networks and Deep Learning, you can get stuck in here with AWS: aws.amazon.com/deep-learning/

Merry Christmas


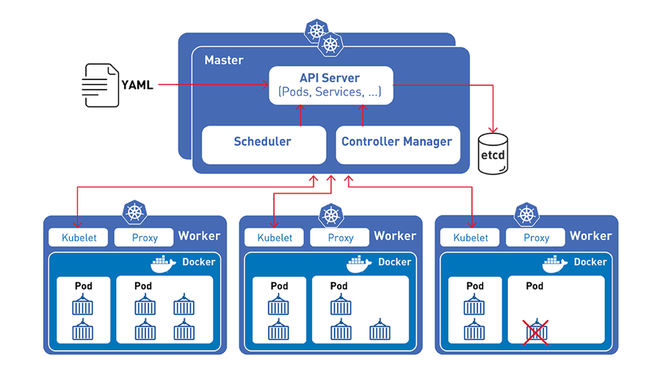

G’day from the land that gave you kangaroos, koalas, redback spiders and Paul Hogan…. sorry about that! So…. what is Kubernetes? Kubernetes is an open-source orchestration platform that helps manage containers at enterprise scale.

Typically, a large enterprise (say, more than 1000 employees) tends to put a lot of stress on the underlying technologies that deliver business value. These stresses include:

• Scaling resources and services to meet demand.
• Providing extremely high availability of services to customers.
• Being able to reconfigure components on the fly, in real time, to give business services shapechanger capabilities.

Can Kubernetes keep up? Well…. when you’re managing more than 50 containers, there are five key functions in Kubernetes you should probably know about:

1. Schedulers do the heavy lifting, intelligently assigning work and monitoring container health.
2. Service discovery keeps track of all the services that are needed and monitors their health.
3. Load balancing allows architectures to shapechange whilst maintaining service stability.
4. Resource management of CPU, memory, disk and network components ensures workloads are correctly sized.
5. Self-healing features automatically detect, kill and restart failed instances.

The biggest inhibitor to Kubernetes adoption is the complexity of learning and using the service to achieve those business outcomes. There are some great examples of cloud computing platforms that can kickstart your Kubernetes journey and simplify some of this complexity:

Amazon Elastic Kubernetes Service (EKS)
Microsoft Azure Kubernetes Service (AKS) - azure.microsoft.com/en-us/services/kubernetes-service/

Both AWS and Microsoft provide all of the infrastructure needed to run Kubernetes, as well as crystal-clear documentation on container management best practices.

Have Fun!!!!
P

I hope you are all staying safe in this unique period of coronavirus lockdown.
To take your mind off all things viral, I'm going to talk about the basics of Amazon Web Services and a service called Elastic Compute Cloud, or EC2 for short. The Amazon EC2 service offers a way of spinning up virtual machines in minutes. Linux and Windows virtual machines are billed per second (with a 60-second minimum), and you can terminate them at any time. There are 4 flavours to choose from:

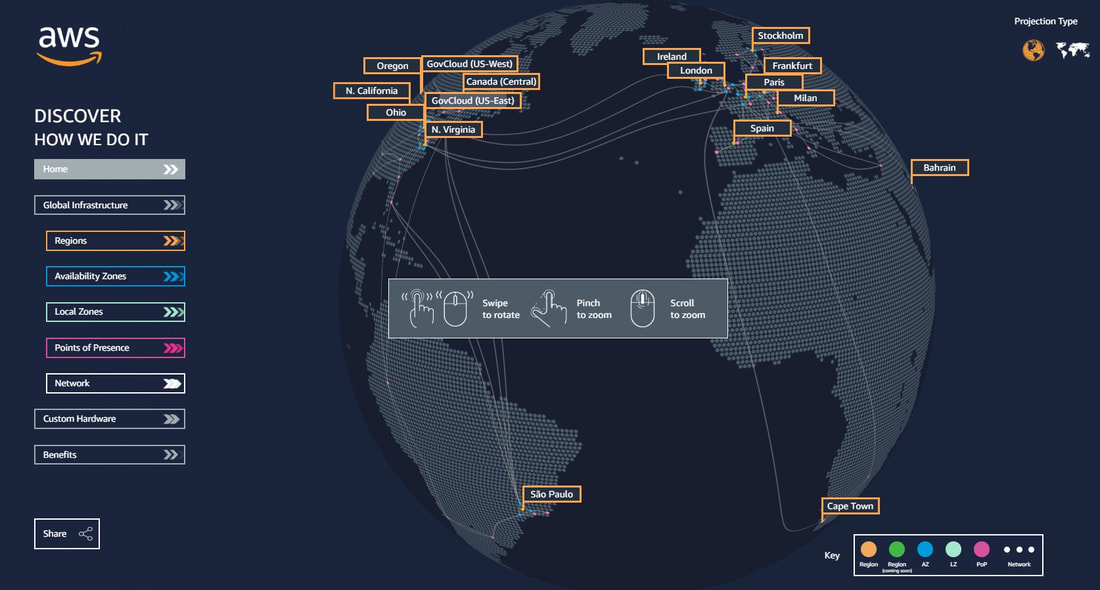

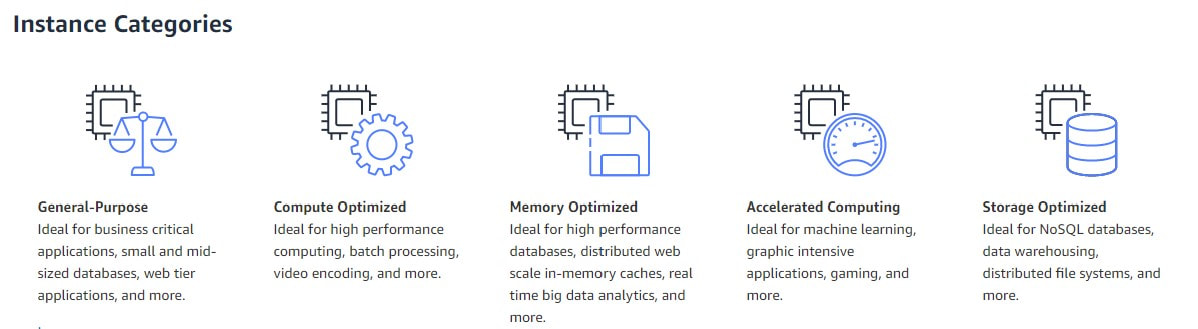

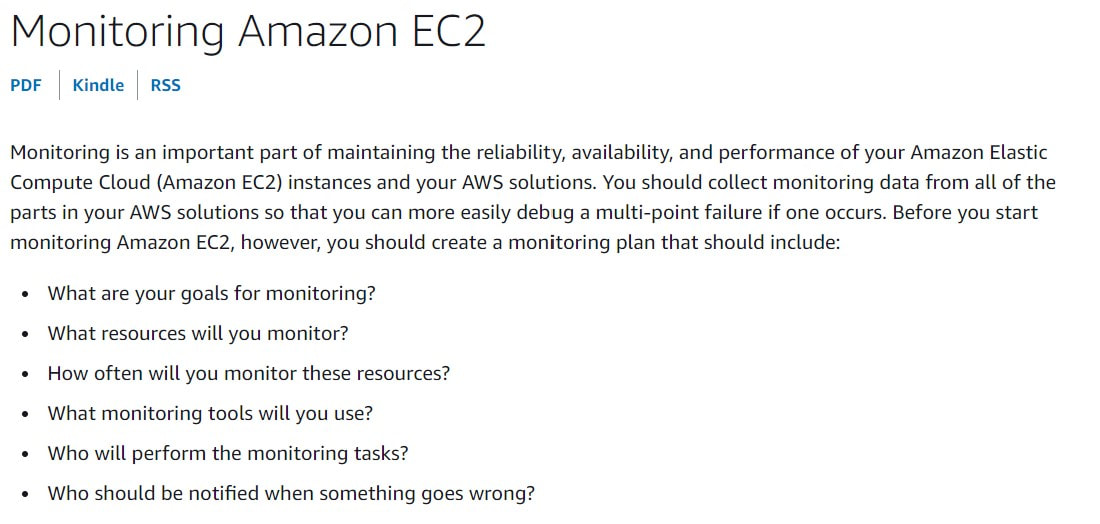

Once you have chosen the flavour that aligns with your commercial model, you can look at what type of instance you want and where you want to host it. There are many locations to choose from. Click on the image below to take you to the interactive map:

Once you know the location, there are quite a few instance types to choose from. Here are the most common categories:

Once you have selected your instance category, you'll then need to pick an EC2 specification. Don't sweat it at this stage.... try out a few specs. Measure their performance and price over a few days, and then you can select the one that meets your needs. Just be sure that, while you're monitoring your EC2 instances, they are running their normal workload. We call this first set of measurements a baseline, and we can use it to measure what "normal" looks like. Click on the link below to understand best practices around baselining:

Deviations from this baseline can indicate abnormal activity: things like an unexpected peak in demand from our users or, worse still, a malicious attack, whether by a person, a bot or some form of malware.
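Here's a minimal Python sketch of the baselining idea: summarise a "normal" period as a mean and standard deviation, then flag new readings that stray too far from it. The CPU figures are invented for illustration, and the 3-standard-deviation threshold is just a common rule of thumb, not an AWS recommendation. In practice you'd pull real metrics, such as CPUUtilization, from a monitoring service like Amazon CloudWatch.

```python
from statistics import mean, stdev

def build_baseline(samples):
    """Summarise what 'normal' looks like as (mean, standard deviation)."""
    return mean(samples), stdev(samples)

def find_deviations(readings, baseline, threshold=3.0):
    """Return (index, value) for readings more than `threshold` standard deviations from normal."""
    avg, spread = baseline
    return [(i, value) for i, value in enumerate(readings)
            if abs(value - avg) > threshold * spread]

# Hypothetical hourly average CPU utilisation (%) for one EC2 instance
# while it was running its normal workload.
baseline_cpu = [22, 25, 24, 23, 26, 21, 24, 25, 23, 22, 24, 26]
baseline = build_baseline(baseline_cpu)

# Later readings: the one at index 3 spikes well outside the baseline.
today_cpu = [23, 24, 25, 91, 24]
print(find_deviations(today_cpu, baseline))  # [(3, 91)]
```

A flagged reading doesn't tell you *why* it happened; it just tells you where to look, whether that turns out to be a busy sales day or something nastier.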

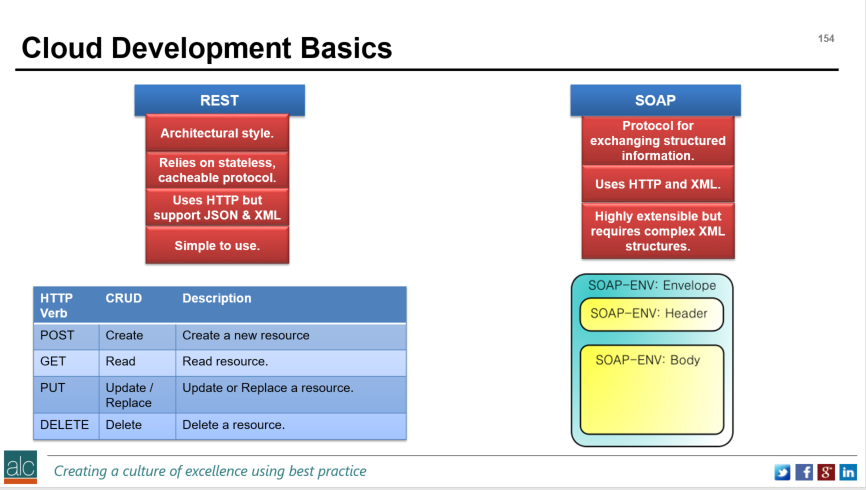

I hope you enjoyed my blog post. Take care and stay safe.
P

I hope everyone is staying safe and making the most of their time indoors. Today I am rediscovering the beauty and elegance of Amazon Web Services, or AWS for short. AWS provides a cloud computing platform that allows you to build apps and infrastructure to deliver business outcomes. Today, my session is going to focus on a very basic service called Elastic Compute Cloud, or EC2 for short. It is a simple service that allows you to build your own virtual machines, or VMs. These VMs are the most basic building block in cloud computing. Below is a fantastic Zoom video that steps you through some of the basics of AWS EC2 and provides a simple hands-on demo on the AWS platform. I really hope you enjoy it.

I'm consistently seeing Twitter feeds with #Microservices embedded in the tweets. So, what are microservices, what value do they add to my business, and how do I secure them if I decide to adopt them?

MicroServices

Firstly, microservices and REST are architectural approaches to developing code. So, let me cover what REST is, and then microservices become a little easier to understand. In the Certified Cloud Security Professional course that I run, there is a specific section under Cloud Application Security where the learning outcome is to describe specific cloud application architectures and to understand application programming interface (API) structures. Below is a diagram that outlines the 2 types of APIs that we consider in the course:

To summarise, SOAP is a protocol for exchanging structured information, whereas REST is an architectural style that relies on stateless, cacheable communication, typically over HTTP. Put simply, REST is a way of Creating (using the POST verb), Reading (using the GET verb), Updating (using the PUT verb) and Deleting (using the DELETE verb) resources.
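Here's a minimal Python sketch of how those four verbs map onto Create, Read, Update and Delete for a single kind of resource. `PictureStore`, the `/pictures/<name>` paths and the particular status codes are my own illustrative assumptions, not any cloud provider's API, and there's no real HTTP server here, just a `handle(method, path, body)` function so you can see the mapping. Real REST APIs vary; S3, for example, uses PUT to create objects.

```python
from typing import Dict, Optional, Tuple

class PictureStore:
    """A toy resource store showing how REST verbs map onto CRUD.

    Hypothetical: resources live at /pictures/<name>, bodies are plain strings,
    and everything is kept in memory. There is no real HTTP server here.
    """

    def __init__(self) -> None:
        self._pictures: Dict[str, str] = {}

    def handle(self, method: str, path: str, body: Optional[str] = None) -> Tuple[int, str]:
        """Dispatch one request; returns (HTTP status code, response body)."""
        name = path.rsplit("/", 1)[-1]
        if method == "POST":  # Create
            if name in self._pictures:
                return 409, "already exists"
            self._pictures[name] = body or ""
            return 201, "created"
        if method == "GET":  # Read
            if name not in self._pictures:
                return 404, "not found"
            return 200, self._pictures[name]
        if method == "PUT":  # Update
            if name not in self._pictures:
                return 404, "not found"
            self._pictures[name] = body or ""
            return 200, "updated"
        if method == "DELETE":  # Delete
            if name not in self._pictures:
                return 404, "not found"
            del self._pictures[name]
            return 204, ""
        return 405, "method not allowed"

store = PictureStore()
print(store.handle("POST", "/pictures/dodgy_picture.jpg", "jpeg bytes"))  # (201, 'created')
print(store.handle("GET", "/pictures/dodgy_picture.jpg"))                 # (200, 'jpeg bytes')
print(store.handle("DELETE", "/pictures/dodgy_picture.jpg"))              # (204, '')
print(store.handle("GET", "/pictures/dodgy_picture.jpg"))                 # (404, 'not found')
```

Notice that each call carries everything the service needs (verb, path, body); that's the stateless part of REST.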

Let's extend this concept a little further and we'll start to understand microservices. With REST, we can see that the commands, or verbs, are very simple. This means it's easy to create small independent services: Principle 1 of microservices. By ensuring that our APIs are clearly documented and follow this RESTful style, we form the basis of well-defined, lightweight APIs: Principle 2. And finally, by using REST (i.e. POST, GET, PUT, DELETE), we are using an HTTP resource API: Principle 3.

Let's expand on those 3 key principles and explore what they mean:

P1 - Create small independent services. Independence means that we do not need a centralised datastore; instead the data is distributed, and when we change one of our services, it does not require other services to be changed. This is referred to as loose coupling. Small means that each service focuses on a very small set of capabilities within a specific domain, ideally a single capability.

P2 - Create well-defined, lightweight APIs. Well-defined means that I clearly document how to call the API. Lightweight refers to the small range of functions that I can execute to achieve my outcome.

P3 - Use an HTTP resource API where possible. This means we are using HTTP commands, i.e. POST, GET, PUT, DELETE, in a RESTful manner.

Below is an HTTP request that follows the 3 principles using AWS. It deletes a JPEG picture file, called dodgy_picture.jpg, from storage on AWS.
The storage is Simple Storage Service, or S3 for short, and we refer to the container that holds the JPEG as a bucket:

```http
DELETE /dodgy_picture.jpg HTTP/1.1
Host: examplebucket.s3-us-west-2.amazonaws.com
Date: Mon, 11 Apr 2016 12:00:00 GMT
x-amz-date: Mon, 11 Apr 2016 12:00:00 GMT
Authorization: authorization string
```

In this case, we can see it is a small independent service, it uses an HTTP resource API, and it is lightweight and well-defined, as exemplified by the AWS S3 documentation: http://docs.aws.amazon.com/AmazonS3/latest/dev/UsingBucket.html

We can also do the same on Azure. Again, the documentation for Azure Blob storage shows that the APIs are well-defined: https://docs.microsoft.com/en-us/rest/api/storageservices/blob-service-rest-api

Security

If we look to the Certified Cloud Security Professional (CCSP) best practices, we can see some common themes that apply to microservices:

Secure APIs

To prevent unauthorised access to the data, which can lead to data loss, data breach or service hijacking, it's best to implement a method of ensuring that the API calls you receive are authenticated (you know who is calling) and authorised (the caller is allowed to make that call and access your data). This can be achieved using an API gateway service, such as AWS API Gateway or Azure API Management, most likely linked to a directory service, such as Microsoft Active Directory or AWS Cloud Directory.

Protect Data

To protect data in transit between services making API calls, it's worth considering virtual private network (VPN) technologies, such as Azure VPN Gateway or AWS VPN CloudHub. To protect data at rest, you can use encryption. Encryption requires the exchange of keys, and the keys need to be secured in a safe place. Remember, if the location is too safe and you cannot find the keys, you cannot decrypt the data, which means you're prone to data loss.
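To make the Secure APIs advice a little more concrete, here's a minimal Python sketch of one common way to authenticate API calls: the caller signs each request with a shared secret using HMAC-SHA256, and the service recomputes the signature before doing anything. It's loosely in the spirit of how AWS signs requests like the S3 DELETE above (AWS Signature Version 4 is also based on HMAC-SHA256), but it is not SigV4 and is far simpler. `CLIENT_SECRETS`, `sign_request` and `is_authenticated` are hypothetical names, and a real API gateway would also reject stale timestamps to stop replayed requests, then apply authorisation rules on top.

```python
import hashlib
import hmac

# Hypothetical per-client secrets. In a real system these belong in a key
# management service (e.g. Azure Key Vault or AWS Key Management Service), never in source code.
CLIENT_SECRETS = {"photo-app": b"example-secret-do-not-use"}

def sign_request(secret: bytes, method: str, path: str, timestamp: str) -> str:
    """Caller side: HMAC-SHA256 over the parts of the request we want to protect."""
    message = "\n".join([method, path, timestamp]).encode("utf-8")
    return hmac.new(secret, message, hashlib.sha256).hexdigest()

def is_authenticated(client_id: str, method: str, path: str,
                     timestamp: str, signature: str) -> bool:
    """Service side: accept the call only if the signature matches the caller's secret."""
    secret = CLIENT_SECRETS.get(client_id)
    if secret is None:
        return False  # unknown caller
    expected = sign_request(secret, method, path, timestamp)
    # compare_digest helps avoid leaking information through timing differences
    return hmac.compare_digest(expected, signature)

ts = "20160411T120000Z"
sig = sign_request(CLIENT_SECRETS["photo-app"], "DELETE", "/dodgy_picture.jpg", ts)
print(is_authenticated("photo-app", "DELETE", "/dodgy_picture.jpg", ts, sig))  # True
print(is_authenticated("photo-app", "GET", "/dodgy_picture.jpg", ts, sig))     # False: request was altered
```

Because the verb and path are part of the signed message, anyone who tampers with the request in flight breaks the signature.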
| Protection | Azure | AWS |
|---|---|---|
| Data in Motion | Azure VPN Gateway | AWS VPN CloudHub |
| Data at Rest | Azure Storage Service Encryption | AWS S3 Server-Side Encryption |
| Key Management | Azure Key Vault | AWS Key Management Service / AWS CloudHSM |

Monitor

Enable application logging on all your APIs so you can see that they are behaving as required. Logging at the infrastructure level can also reveal vulnerabilities that may affect the way APIs are called. At both levels, you should be centralising all your logs into a SIEM (Security Information and Event Management) system.

| Capability | Azure | AWS |
|---|---|---|
| SIEM | Azure Log Integration / Azure Operations Management Suite - Log Analytics | AWS Kinesis / AWS ElasticSearch |

The OWASP Top 10 lists the most critical web application security risks, many of which can be introduced as part of a software development lifecycle. There are various tools that can help detect the OWASP vulnerabilities:

| Capability | Azure | AWS |
|---|---|---|
| OWASP Detection Tools | Azure Application Gateway | Amazon Web Application Firewall |
| Vulnerability Assessment | Azure Security Centre / Azure API Management | AWS Inspector / AWS Vulnerability / Penetration Testing |

Business Value

By adopting the 3 principles outlined above and incorporating the key security best practices, we can clearly see that we're helping the business in many ways:

Faster Value

Adopting a microservices architecture allows us to develop services quickly and scale them as required. When this is combined with agile storyboarding and agile prototyping techniques, our clients can clearly see tangible results within weeks.

Higher Efficiency

The probability of wastage decreases rapidly when we adopt a microservices architecture, since we are focused on creating functions that our clients absolutely need. By enabling secure API communications coupled with logging, we can provide quality assurance back to the business using analytics. We also have the freedom to fail fast, which means we can innovate and try out new things.
And because our architecture is composed of small, loosely coupled components, we can roll out new versions or releases of services in a DevOps manner on a weekly basis, rather than performing this on a monolithic scale every few months or years.

Finally, we can take microservices further by utilising serverless architectures, such as AWS Lambda or Azure Functions. This reduces the need for our developers to worry about the size and scale of the virtual machine and storage infrastructure, so they can focus on writing reliable, scalable and easy-to-understand code. Another concept we can utilise is containerisation using Docker, which gives us a consistent environment that we can port between test, pre-production and production without introducing those nasty regressions, or unintended bugs. In the cloud, Docker containers can be run on the AWS EC2 Container Service or Azure Container Service. Serverless architectures and containerisation each deserve a blog post of their own, so I’ll cover them in future blog editions. What are you waiting for? Let's move to microservices….

Author

Paul Colmer is an AWS Senior Technical Trainer. Paul has an infectious passion for inspiring others to learn and for applying disruptive thinking in an engaging and positive way.
